Much has been written about the accelerating pace of technological advancement. As humans, we are used to seeing and understanding linear growth, but exponential growth is harder to comprehend, as are its impacts.

In his essay "The Law of Accelerating Returns", Ray Kurzweil lays out the principles behind accelerating returns:

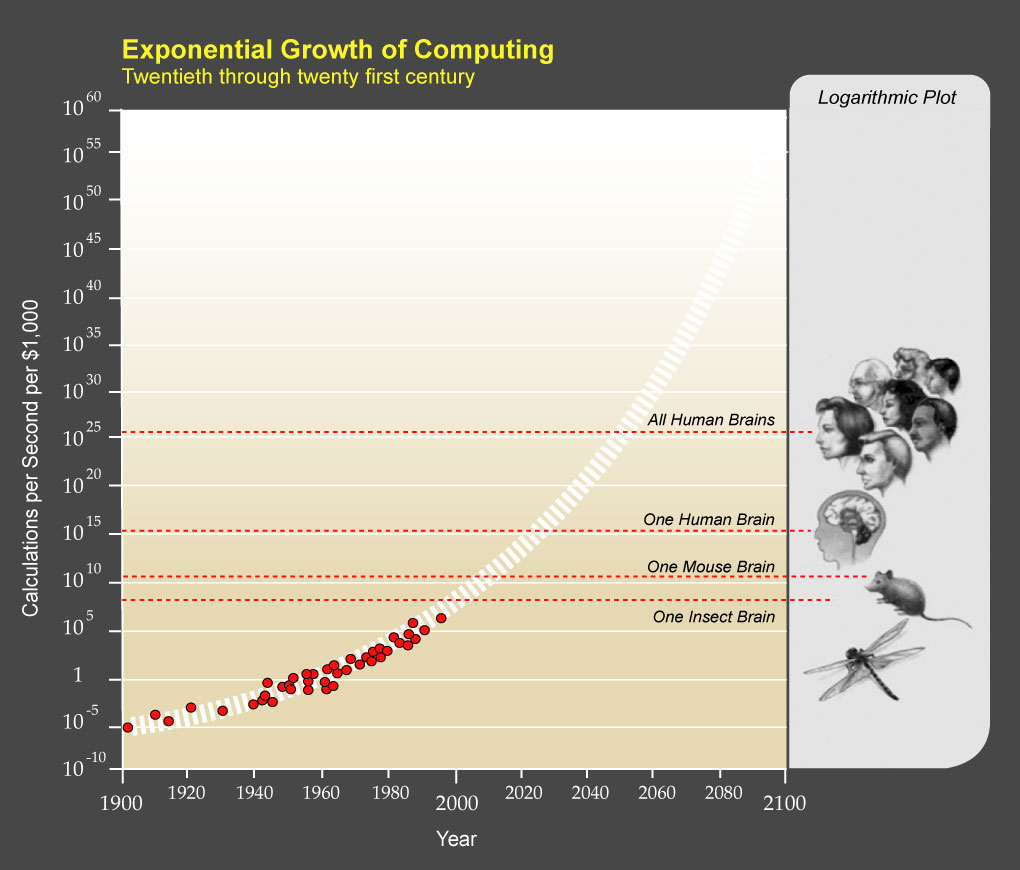

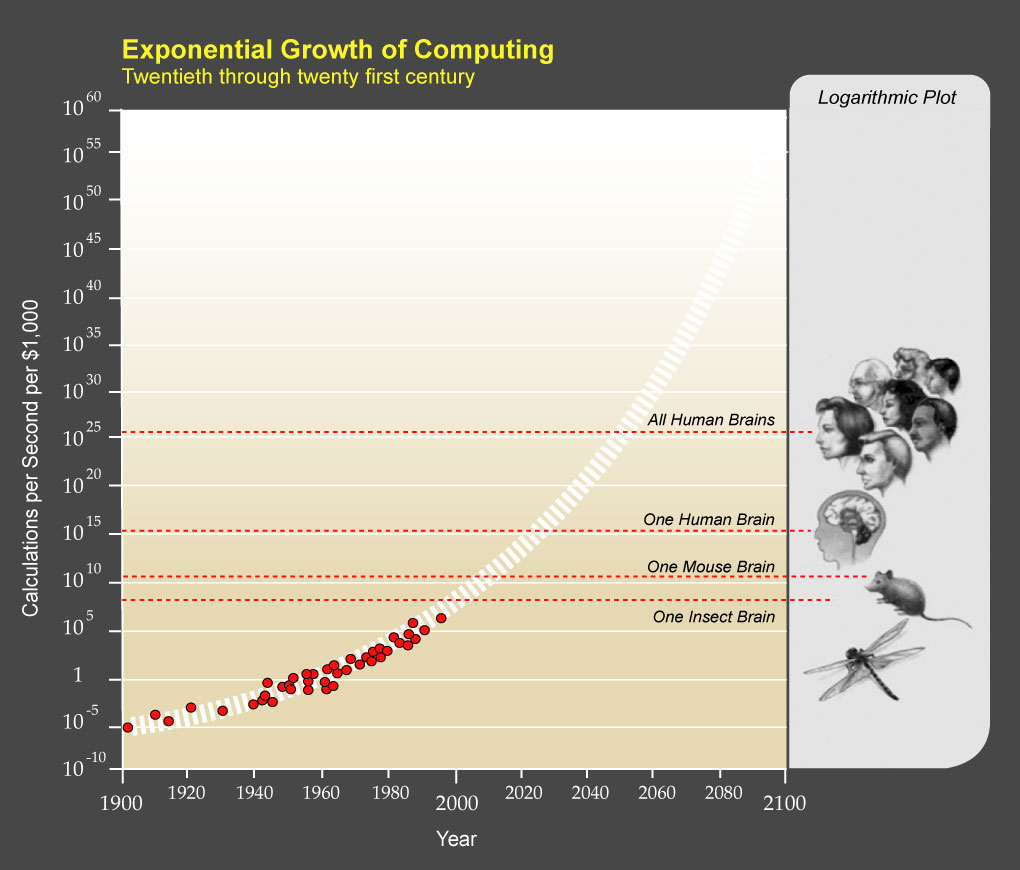

- Evolution applies positive feedback in that the more capable methods resulting from one stage of evolutionary progress are used to create the next stage. As a result, the rate of progress of an evolutionary process increases exponentially over time. Over time, the "order" of the information embedded in the evolutionary process (i.e., the measure of how well the information fits a purpose, which in evolution is survival) increases.

- A correlate of the above observation is that the "returns" of an evolutionary process (e.g., the speed, cost-effectiveness, or overall "power" of a process) increase exponentially over time.

- In another positive feedback loop, as a particular evolutionary process (e.g., computation) becomes more effective (e.g., cost effective), greater resources are deployed toward the further progress of that process. This results in a second level of exponential growth (i.e., the rate of exponential growth itself grows exponentially).

- Biological evolution is one such evolutionary process.

- Technological evolution is another such evolutionary process. Indeed, the emergence of the first technology creating species resulted in the new evolutionary process of technology. Therefore, technological evolution is an outgrowth of--and a continuation of--biological evolution.

- A specific paradigm (a method or approach to solving a problem, e.g., shrinking transistors on an integrated circuit as an approach to making more powerful computers) provides exponential growth until the method exhausts its potential. When this happens, a paradigm shift (i.e., a fundamental change in the approach) occurs, which enables exponential growth to continue.
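The "second level of exponential growth" in the list above is easier to grasp with numbers. The following is a minimal sketch, not Kurzweil's own model: the function names and the parameter values (`rate=0.1`, `rate_growth=0.02`) are assumptions chosen purely for illustration. It compares linear growth, ordinary exponential growth, and growth whose rate itself grows exponentially.

```python
import math


def linear(t, slope=1.0):
    """Linear growth: the same absolute amount is added every year."""
    return 1.0 + slope * t


def exponential(t, rate=0.1):
    """Exponential growth with a constant growth rate."""
    return math.exp(rate * t)


def double_exponential(t, rate=0.1, rate_growth=0.02):
    """Growth whose rate itself grows exponentially.

    Assumed model: x'(t) = r(t) * x(t) with r(t) = rate * exp(rate_growth * t).
    Solving with x(0) = 1 gives the closed form below.
    """
    return math.exp(rate / rate_growth * (math.exp(rate_growth * t) - 1.0))


# Print the three curves side by side at a few points in time.
for year in (0, 1, 10, 25, 50):
    print(
        f"year {year:>2}: "
        f"linear={linear(year):8.1f}  "
        f"exponential={exponential(year):10.1f}  "
        f"double_exponential={double_exponential(year):12.1f}"
    )
```

Early on the exponential and double-exponential curves are almost indistinguishable, which is part of why this kind of growth is easy to underestimate; the gap only becomes dramatic later.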

Kurzweil has developed his hypothesis by creating a number of interesting graphs that map out the exponential growth of technology, and specifically of computing power.

While there is continued debate on whether computers will ever reach the same level of intelligence (itself a difficult concept to define) as biological entities, the implications for our lives are unmistakable.

One aspect of his hypothesis is particularly thought-provoking: because technology grows exponentially, the 21st century will see the equivalent of roughly 20,000 years of technological advancement, measured at today's rate of progress. Many pessimists about the future discount this acceleration, and the compression of technological advancement into shorter and shorter periods of time. To put it another way, we will see a century's worth of advancement, at today's rate, in just 25 years.
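To see where figures of this size can come from, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that the rate of progress doubles every ten years and that progress is measured in "years of advancement at the starting rate". The doubling period and the function names are assumptions, not values taken from the text above.

```python
import math

# Assumption for illustration: the rate of progress doubles every decade.
DOUBLING_PERIOD_YEARS = 10.0
GROWTH_CONSTANT = math.log(2.0) / DOUBLING_PERIOD_YEARS


def cumulative_progress(calendar_years):
    """Total progress after `calendar_years`, in years at the starting rate.

    This is the integral of 2 ** (t / DOUBLING_PERIOD_YEARS) from 0 to
    `calendar_years`.
    """
    return (math.exp(GROWTH_CONSTANT * calendar_years) - 1.0) / GROWTH_CONSTANT


def years_to_accumulate(target_progress):
    """Calendar years needed to accumulate `target_progress` years of progress."""
    return math.log(1.0 + GROWTH_CONSTANT * target_progress) / GROWTH_CONSTANT


print(f"Progress in 100 calendar years: {cumulative_progress(100):,.0f} years")
print(f"Calendar years for a century of progress: {years_to_accumulate(100):.1f}")
```

Under this simple continuous model, a century accumulates roughly 15,000 years of progress, and a century's worth of progress takes about 30 calendar years. That is the same order of magnitude as the figures quoted above; the exact values depend on the assumed doubling period and on how the starting rate is defined.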